Scales Up

Scales to 1000s of computers on cloud or HPC

Scales Down

Trivial to use on a laptop

Flexible

Enables sophisticated algorithms beyond traditional big data

Native

Plays nicely with native code and GPUs without the JVM

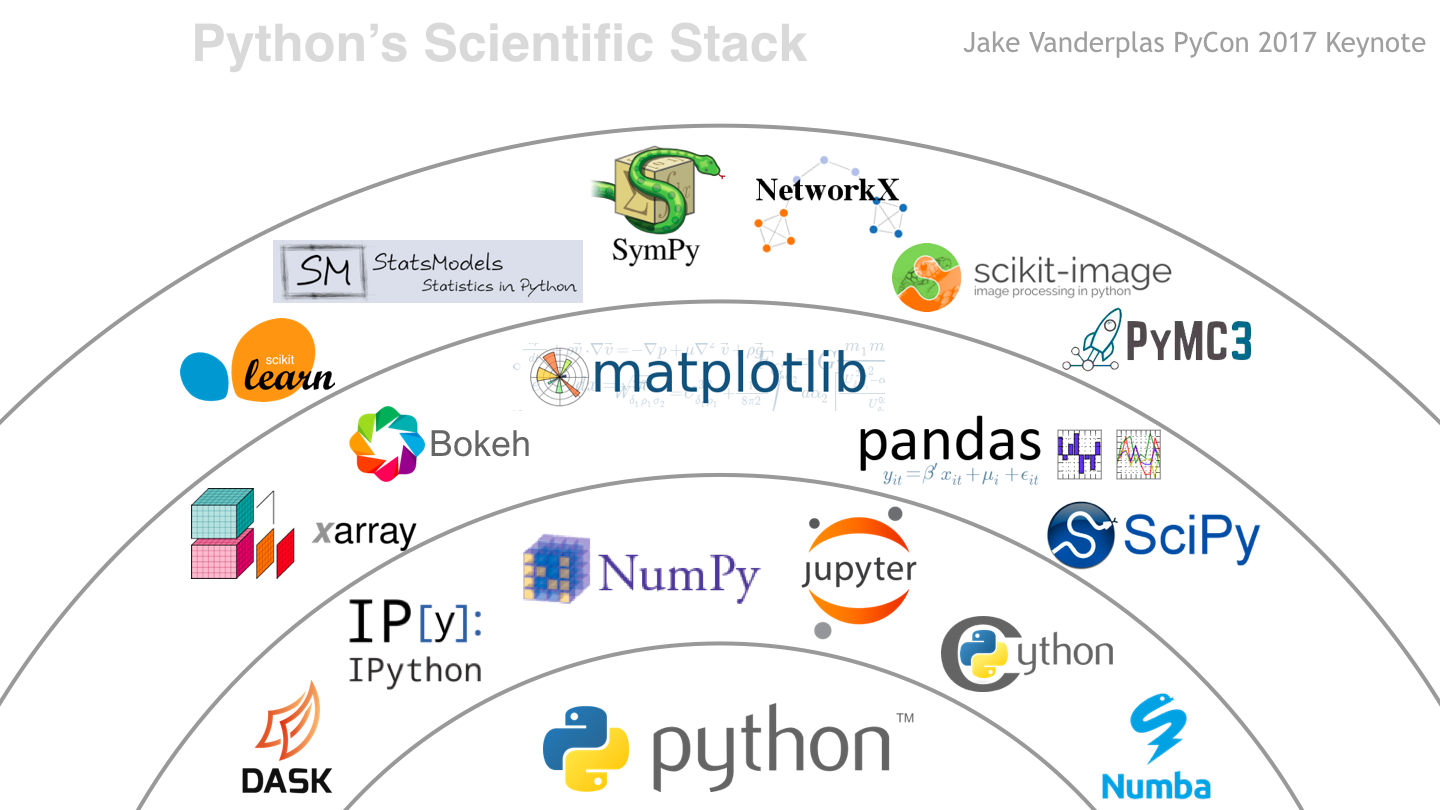

Ecosystem

Part of the broader community

Dask began as a project to parallelize NumPy with multi-dimensional blocked algorithms. These algorithms are complex and proved challenging for existing parallel frameworks like Apache Spark or Hadoop, so we developed a lightweight task scheduler that was flexible enough to handle them. It wasn't as highly optimized for SQL-like queries, but could do everything else.
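To make the idea of a blocked algorithm driven by a task scheduler concrete, here is a toy sketch in plain Python. It is not Dask's implementation: the helper names (`build_blocked_sum_graph`, `resolve`, `get`) are hypothetical, and everything runs serially. It only borrows the convention of describing work as a dictionary that maps keys to `(function, arguments...)` tuples.

```python
# Toy illustration only: a blocked sum expressed as a task graph.
# All helper names here are hypothetical and not part of Dask.

def build_blocked_sum_graph(values, chunk_size):
    """Split `values` into blocks and describe the sum as a dict of tasks."""
    graph = {}
    partial_keys = []
    for i in range(0, len(values), chunk_size):
        key = f"partial-{i // chunk_size}"
        # Each task is a tuple: (callable, argument, ...)
        graph[key] = (sum, values[i:i + chunk_size])
        partial_keys.append(key)
    # The final task depends on every partial result.
    graph["total"] = (sum, partial_keys)
    return graph


def resolve(graph, arg):
    """Replace keys found in `arg` with their computed values."""
    if isinstance(arg, list):
        return [resolve(graph, item) for item in arg]
    if isinstance(arg, str) and arg in graph:
        return get(graph, arg)
    return arg


def get(graph, key):
    """Run the task stored under `key`, computing its dependencies first."""
    func, *args = graph[key]
    return func(*(resolve(graph, arg) for arg in args))


graph = build_blocked_sum_graph(list(range(10)), chunk_size=4)
total = get(graph, "total")
print(total)  # 45
```

Because each `partial-*` task touches only its own block, a real scheduler is free to run those tasks in parallel on different cores or machines; this sketch simply runs them one after another.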

From here it was easy to extend the solution to Python lists, Pandas, and other libraries whose algorithms were somewhat simpler.

As Dask was adopted by more groups it encountered more problems that did not fit the large array or dataframe programming models. The Dask task schedulers were of value even when the Dask arrays and dataframes were not.

Dask grew APIs like dask.delayed and futures that exposed the task scheduler without forcing big array and dataframe abstractions. This freedom to explore fine-grained task parallelism gave users the control to parallelize other libraries and build custom distributed systems within their work.
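As a small illustration of that style, the sketch below uses `dask.delayed` to build a task graph out of ordinary Python functions. The functions `inc` and `add` are made up for this example; only `delayed` and `.compute()` come from Dask, and the snippet assumes Dask is installed.

```python
from dask import delayed


@delayed
def inc(x):
    # Calling inc(...) records a task instead of running immediately.
    return x + 1


@delayed
def add(x, y):
    return x + y


# Nothing is computed yet; these calls only build the task graph.
a = inc(1)
b = inc(2)
total = add(a, b)

# compute() hands the graph to a scheduler, which may run the two
# independent inc tasks in parallel before combining them.
result = total.compute()
print(result)  # 5
```

The same pattern applies to functions from other libraries: wrap the calls you want deferred, wire their outputs together, and call `compute()` once at the end.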

Today Dask is used because it scales Python comfortably and because it gives users more flexibility without sacrificing scale. We hope it serves you well.

conda install dask
